Interface Application Development

Introduction

This project is designed to help engineering, physics, and even design students visualize how physics works within an object or a larger model. It aims to harness visual learning for students who may struggle with textbooks, and to give them something interactive to work with.

Description

The theme I chose for my project was “Education”, with a focus on finding new ways to increase students’ engagement.

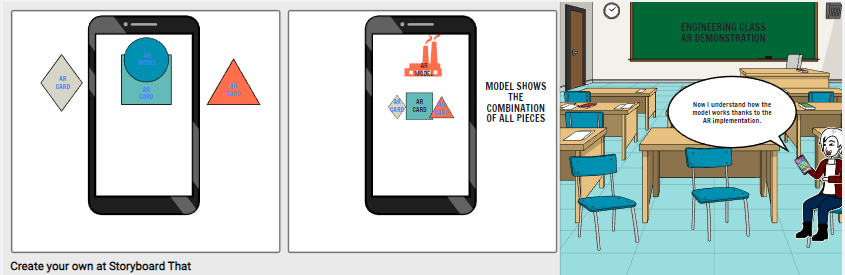

The application uses AR cards that represent different pieces of a physics problem or engineering object. It also has a main “base” plate, which is where the completed augmented system appears. When an individual (non-base) card is scanned, a model of that piece appears on it. In this example, three models were used: two cogwheels and a flywheel. Once the AR cards are placed near the base plate, the pieces appear as part of a larger model. A flywheel placed on its own just sits there, as no forces are acting on it. But once a small cogwheel is added, it begins spinning. This can be enhanced by adding the larger cogwheel, which doubles the speed of the smaller cogwheel.
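The doubling described above matches the standard relation for a meshed gear pair, where the driven gear's speed scales with the ratio of tooth counts. The following is a minimal sketch of that relation, not the application's actual script: the function name `driven_speed` and the tooth counts (24 and 12) are assumptions chosen for illustration, and the application may simply hard-code the speed change.

```python
def driven_speed(driver_speed_rpm: float, driver_teeth: int, driven_teeth: int) -> float:
    """Return the speed of the driven gear in a meshed pair.

    Only the magnitude is returned; in a real pair the two gears
    turn in opposite directions.
    """
    if driver_teeth <= 0 or driven_teeth <= 0:
        raise ValueError("tooth counts must be positive")
    # Meshed teeth pass the contact point at the same rate, so
    # speed_driven * teeth_driven == speed_driver * teeth_driver.
    return driver_speed_rpm * driver_teeth / driven_teeth


# Hypothetical tooth counts: the large cogwheel has twice as many
# teeth as the small one, so the small one turns twice as fast.
small_speed = driven_speed(30.0, driver_teeth=24, driven_teeth=12)
print(small_speed)  # 60.0
```

With a 2:1 tooth ratio the smaller cogwheel turns at twice the speed of the larger one, which is the behaviour the cards demonstrate.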

The sciences have moved towards visual learning where possible, helped by more accessible high-performance computing (McGrath & Brown 2005). Scientists can model DNA and atoms, and even simulate environments in which to test physics. Students can learn from pre-created images, but this kind of simulation technology has not reached classrooms in the way it has reached scientists. Giving students control of the “scientific image” makes the science easier to understand than when it is entirely out of their hands (McGrath & Brown 2005).

This technology allows the student to visualize the effects in 3D space, and to move around and view the mechanism working from any angle. Whilst the models and science used in this application were relatively simple, the same approach could extend to much more complex branches of science.

The goal of the project is to combine psychomotor functions, i.e., the movement of the individual pieces, with learning what the pieces can do both in the context of the application and in reality. (source2) This is also aimed at increasing engagement, because it gives the student something to physically do. Any basic AR device can be used, from a head-mounted display like the HoloLens to a simple phone. It also allows a simpler and more intuitive interface.

VR would also be a workable design for this application, but VR requires a headset, which is often harder for schools to source than phones. AR also lets the teacher see what the students are doing and teach alongside them, rather than each student being enclosed inside a VR head-mounted display.

Interaction Design

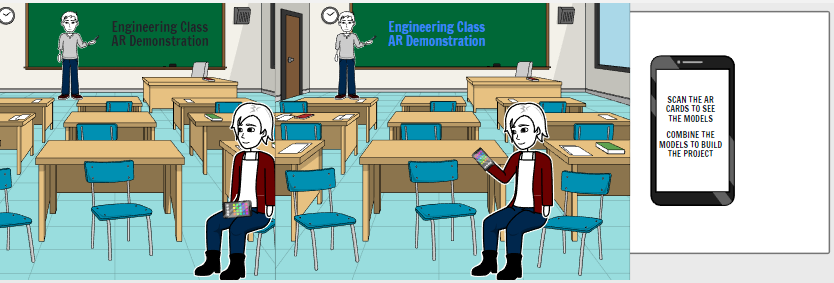

(Created with Storyboard That)

The proposed interaction for this software is building a larger model from smaller provided pieces. I personally find physics and engineering concepts much easier to understand with visual examples, so I wondered how I could put this into practice for learning. Building things or taking them apart yourself can really help to identify what each part is for. I was inspired by some Certificate II Automotive courses that let students actually tear down an engine, with visual learning being the key.

Using the AR cards to show what they represent, and combining them into the larger model, was only part of the intended design. A fully fleshed-out design would show explanations directly on each card, as well as on the model itself. The plan was also to have buttons that the user could press to alter what was happening in the scene.

The AR cards were also planned to carry full images of the models. This proved difficult, as the images were too similar to each other and far too simplistic for the Vuforia system to pick up properly. They ended up being swapped for generated images whose sharp edges Vuforia's built-in detection can use to locate each card.

Viewing the cards to see the models was chosen to make the software easier to use and more engaging. If users can see the pieces they are working with, they can decide which ones to use to build the correct model. The distance-based movement interaction was chosen because it allows more “human-like” interaction: physically placing the object on the model feels natural, especially compared with modelling software, where a simple click may be all that is needed.

Technical Development

Unity was used to develop this application, with the Vuforia package added for augmented reality functionality. Any Android phone running Android 12.0 or above should be able to run the application. The application uses the phone’s camera to place models in the world.

https://www.brosvision.com/ar-marker-generator/ was used to generate the images for the AR cards.

To use the application, first place the AR card labelled “base”. The user can then view the other cards through the camera to see what they represent. Following this, the user can place a card near the base plate to show the object in action.
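The "place a card near the base plate" step can be thought of as a proximity check between tracked card positions. The sketch below is engine-agnostic Python rather than the Unity script used in the application (which is adapted from a collision handler template): the names `is_near_base` and `attached_parts`, the card positions, and the 0.15 m threshold are all hypothetical values chosen for illustration.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical attach distance in metres; the real value is not stated.
ATTACH_DISTANCE = 0.15


def is_near_base(card_pos: Vec3, base_pos: Vec3,
                 threshold: float = ATTACH_DISTANCE) -> bool:
    """Return True when a card is within the threshold distance of the base plate."""
    return math.dist(card_pos, base_pos) <= threshold


def attached_parts(base_pos: Vec3, cards: Dict[str, Vec3]) -> List[str]:
    """Return the names of all cards close enough to join the model, sorted by name."""
    return sorted(name for name, pos in cards.items()
                  if is_near_base(pos, base_pos))


# Hypothetical tracked positions for the base plate and three cards.
base = (0.0, 0.0, 0.0)
cards = {
    "flywheel": (0.10, 0.0, 0.0),       # close enough to attach
    "small_cogwheel": (0.05, 0.0, 0.05),  # close enough to attach
    "large_cogwheel": (0.50, 0.0, 0.0),   # still too far away
}
print(attached_parts(base, cards))  # ['flywheel', 'small_cogwheel']
```

Which parts are attached then decides what the model does, for example whether the flywheel sits still or starts spinning.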

Description of 3D Models

Small Cogwheel: A small cogwheel built following the tutorial by Blender Share (found here: https://www.youtube.com/watch?v=qrYngJmrEFA). I modelled this myself, adapting the tutorial to my needs. It is based on a real cogwheel, with teeth so that it can mesh and spin, and a hole for the axle.

Large Cogwheel: A larger cogwheel built based on the tutorial by Blender Share (found here: https://www.youtube.com/watch?v=qrYngJmrEFA). Simply a larger version of the previous cogwheel.

Flywheel: A flywheel I modelled from several reference images of flywheels. Flywheels store rotational energy for use in things such as engines.

References:

Software Used:

Blender Foundation 2023, Blender <https://www.blender.org>

Brosvision 2013, Augmented Reality Marker Generator <https://www.brosvision.com/ar-marker-generator/>

Storyboard That 2023, Storyboard That <https://www.storyboardthat.com>

Unity Technologies 2022, Unity <https://unity.com>

Vuforia 2023, Vuforia Augmented Reality SDK

Scripts Used:

OnImageCollisionHandlerTemplate adapted from:

Wells L, 2023, OnImageCollisionHandlerTemplate

Tutorials Used For Models:

Blender Share 2017, Modeling a Basic Gear in Blender 2.78 - Basic Modeling Tutorials For Beginners <https://www.youtube.com/watch?v=qrYngJmrEFA> (cogwheel adapted from this tutorial)

Academic Resources:

McGrath M, Brown J 2005, ‘Visual Learning for Science and Engineering’, IEEE Computer Graphics and Applications, vol. 25, no. 5, pp. 56-63. DOI: 10.1109/MCG.2005.117

Furness TA 2001, ‘Toward Tightly Coupled Human Interfaces’, Frontiers of Human-Centered Computing, Online Communities and Virtual Environments. DOI: 10.1007/978-1-4471-0259-5_7

Neither ChatGPT nor GitHub Copilot was used in the making of this assignment.
